Objectives:

The objectives of this section are:

to introduce you to the various attribute types and the ways they can be split

to present you with an algorithm for creating a decision tree classifier

to determine the best split for a given node

to inform you of the problems that arise with the algorithm and how they are addressed.

Outcomes:

By the time you have completed this section you will be able to:

compare decision trees and decide whether or not they are efficient

explain from a high level Hunts’ algorithms and the difficulties encountered

calculate the impurity of a node by using the measures outlined in this section

compare and contrast the measures of impurity

Decision Tree Induction Algorithms

Popular Induction Algorithms

- Hunt’s Algorithm: this is one of the earliest and it serves as a basis for some of the more complex algorithms.

- CART: classification and regression trees is a non-parametric technique that uses the Gini index to determine which attribute should be split and then the process is continued recursively.

- ID3, C4.5: uses the entropy of an attribute and picks the attribute with the highest reduction in entropy to determine which attribute should the data be split with first and then through a series of recursive functions that calculate the entropy of the node the process is continued until all the left nodes are pure

- SLIQ, SPRINT: are scalable algorithms that have been proposed to deal with the issues the greedy algorithms above present.

Hunt's Algorithm

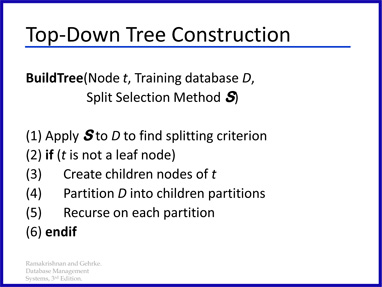

There are various algorithms that are used to create decision trees. Hunt’s Algorithm is one of the earliest and serves as a basis for some of the more complex algorithms. The decision tree is constructed in a recursive fashion until each path ends in a pure subset (by this we mean each path taken must end with a class chosen). There are three steps that are done until the tree is fully grown.

- Examine the record data and find the best attribute for the first node.

- Split the record data based on this attribute

- Recurse on each corresponding child node choosing other attributes

Figure 1 is a slide depicting a high level description of Hunt’s algorithm.

Issues that arise

There are two issues that arise when dealing with decision tree design algorithms.

- How should the training data be split? We have already seen that by using attribute selection measures we can determine the best node to split for each step.

- How should the splitting procedure stop? At what point do you decide to stop the tree-growing process? This is another crucial area that must not be overlooked. One might assume that all we need to is to allow the recursive steps play out all the way through to the end but as we will discuss in the next section this might prove counterproductive and might be fruitless.