Objectives

The objectives of this section are:

to explain the problem of rule generation

to define confidence-based pruning

to show how rule generation works in the Apriori Algorithm

Outcomes

By the time you have completed this section you will be able to:

generate rules based on frequent itemset

use the Confidence theorem presented to prune the rule set.

explain how rule generation occurs in the Apriori Algorithm

Confidence Based Pruning

For each frequent k-itemset, one can produce up to 2k-2 candidate associate rules. This starts to get computationally expensive when you have 10-itemset values. Recall the anti-monotone property from the previous section that was used to prune the frequent itemset. Unfortunately for us, in general, confidence does not have this same property but confidence rules generated from the same itemset has this anti-monotone property.

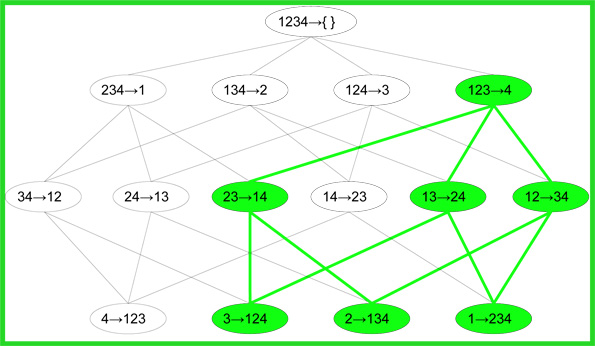

The theorem states that if a rule X→(Y-X) does not satisfy the confidence threshold, then any rule X’→(Y-X’), where X’ is a subset of X, must not satisfy the confidence threshold as well. The diagram below shows the pruning of association rules using this theorem.

Rule Generation in Apriori Algorithm

In the Apriori Algorithm a level-wise approach is used to generate association rules. First of all the high confidence rules that have only one item in the rule consequent are extracted, these rules are then used to generate new candidate rules.

For example, in the diagram above, if {134}→{2} and {124}→{3} are high confidence rules, then the candidate rule {14}→{23} is generated by merging the consequents of both rules.