Objectives:

The objectives of this section are:

to introduce you to the decision tree classifier

to explain the various parts of a decision tree

to present you with some advantages of using the decision tree classifier

to define Occam’s Razor and its application in decision tree construction

Outcomes:

By the time you have completed this section, you will be able to:

recognize the various parts of a decision tree

list some advantages of decision trees

identify the best tree based on Occam’s Razor

Decision Trees

What is a Decision Tree?

A decision tree classifier is a technique used in classification. It is also known as a tree diagram. As the name suggests, the classifier looks like a tree, made up of nodes connected by edges and ending in leaves. A decision tree uses information that we know about an item to help us arrive at one of a set of predefined conclusions. In a general sense, data or information means facts that have been collected through a variety of means for the specific purpose of analysis. In data mining, data organized as a collection of records, each described by the same set of attributes, is referred to as record data. It can be stored in a database in various forms, such as transaction data, a data matrix, or a document-term matrix.

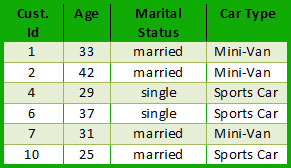

The table in Figure 1 above is a snapshot of record data from the Cougar Car Dealership.

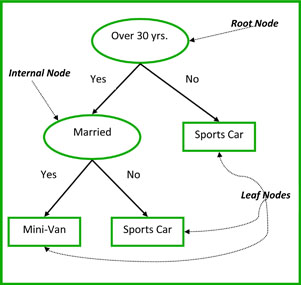

A decision tree is comprised of four parts that are required in order to classify data. Each decision tree must have a root node and at least two leaf nodes and two edges. It can also but is not required to have internal nodes which increase depending on the complexity of the data set.

Figure 2, pictured below, is a simple decision tree for the record data from the Cougar Car Dealership and it helps us classify whether a person will buy a sports car or minivan depending on their age and marital status.

Important Definitions:

A root node is one that has no incoming edges.

An internal node is one that has exactly one incoming edge and two or more outgoing edges.

A leaf, or terminal node, is one that has exactly one incoming edge and no outgoing edges.
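The three definitions above can be checked mechanically: if a tree is stored as a list of (parent, child) edges, each node's role follows from counting its incoming and outgoing edges. The following is a minimal Python sketch under that assumed edge-list representation; the function name `node_roles` and the node names are hypothetical, chosen to mirror the tree described for Figure 2 (assuming the under-30 branch ends directly in a leaf).

```python
from collections import Counter

def node_roles(edges):
    """Label each node as root, internal, or leaf from its edge counts."""
    incoming = Counter(child for _, child in edges)
    outgoing = Counter(parent for parent, _ in edges)
    roles = {}
    for node in set(incoming) | set(outgoing):
        n_in, n_out = incoming[node], outgoing[node]
        if n_in == 0:
            roles[node] = "root"          # no incoming edges
        elif n_in == 1 and n_out >= 2:
            roles[node] = "internal"      # one incoming, two or more outgoing
        elif n_in == 1 and n_out == 0:
            roles[node] = "leaf"          # one incoming, no outgoing
        else:
            roles[node] = "invalid"       # e.g. two parents, or one outgoing edge
    return roles

# Hypothetical node names shaped like the tree described for Figure 2.
edges = [
    ("age test", "leaf A"),
    ("age test", "marital test"),
    ("marital test", "leaf B"),
    ("marital test", "leaf C"),
]
roles = node_roles(edges)
print(sorted(roles.items()))
# [('age test', 'root'), ('leaf A', 'leaf'), ('leaf B', 'leaf'),
#  ('leaf C', 'leaf'), ('marital test', 'internal')]
```

A node with one incoming edge and only one outgoing edge fits none of the definitions, so the sketch flags it as invalid rather than guessing a role.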

Each nonterminal node (the root and any internal nodes) poses a question that further subdivides the items until we arrive at a particular conclusion at a leaf. For instance, in the diagram above, the root node splits the items into those who are over 30 and those who are not, and the internal node then separates the people over 30 who are married from those who are single.
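Classifying a record therefore means starting at the root and answering one question per node until a leaf is reached. The Python sketch below assumes a simple hypothetical representation (classes `Leaf` and `Question`, records as dictionaries with `age` and `marital_status` keys). The tree has the shape described for Figure 2, but its leaf labels are placeholders, not values read from the figure.

```python
class Leaf:
    """Terminal node: stores the class label assigned to records that reach it."""
    def __init__(self, label):
        self.label = label

class Question:
    """Root or internal node: asks a question and follows the matching edge."""
    def __init__(self, question, branches):
        self.question = question  # function mapping a record to a branch name
        self.branches = branches  # branch name -> child node (Leaf or Question)

def classify(node, record):
    # Answer one question per level until a leaf is reached.
    while isinstance(node, Question):
        node = node.branches[node.question(record)]
    return node.label

# Hypothetical tree shaped like the one described for Figure 2.
# The leaf labels are placeholders, not read from the figure.
tree = Question(
    question=lambda r: "over 30" if r["age"] > 30 else "30 or under",
    branches={
        "30 or under": Leaf("sports car"),
        "over 30": Question(
            question=lambda r: r["marital_status"],
            branches={"married": Leaf("minivan"), "single": Leaf("sports car")},
        ),
    },
)

print(classify(tree, {"age": 42, "marital_status": "married"}))  # minivan
print(classify(tree, {"age": 25, "marital_status": "single"}))   # sports car
```

Note that a 25-year-old never reaches the marital-status question: only the nodes on one root-to-leaf path are visited.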

Advantages of Decision Trees

Decision Tree classifiers are very popular for a number of reasons:

- Comprehensibility: decision trees are represented in a form that is intuitive to the average person and therefore requires little explanation. Just by looking at the diagram, one is able to follow the flow of information.

- Efficiency: the learning and classification steps of decision tree induction are simple and fast; classifying a new record only requires following a single path from the root to a leaf.

- Extendibility: decision trees can handle high-dimensional data and are easy to expand to fit the data set, and because constructing them does not require any domain knowledge, they can be used for exploratory knowledge discovery.

- Flexibility: decision trees are used in many areas of classification, including manufacturing and production, financial analysis, molecular biology, astronomy, and medicine.

- Portability: decision trees can be combined with other decision techniques, and they currently serve as the basis of several commercial rule induction systems.
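One common way a tree feeds a rule-based system is that every path from the root to a leaf can be read off as an IF ... THEN rule. The Python sketch below assumes a hypothetical nested-tuple encoding (a test node is `(attribute, {edge value: child})` and a leaf is just its class label string); the function name `extract_rules` and the leaf labels are placeholders, not taken from Figure 2, and the sketch does not describe how any particular commercial system works.

```python
def extract_rules(node, conditions=()):
    """Turn every root-to-leaf path into an IF ... THEN ... rule."""
    if isinstance(node, str):  # a leaf is stored as its class label
        lhs = " AND ".join(conditions) if conditions else "TRUE"
        return [f"IF {lhs} THEN {node}"]
    attribute, branches = node  # a test node: (attribute, {edge value: child})
    rules = []
    for value, child in branches.items():
        # Each edge taken adds one condition to the rule being built.
        rules.extend(extract_rules(child, conditions + (f"{attribute} is {value}",)))
    return rules

# Hypothetical tree; the leaf labels are placeholders, not read from Figure 2.
tree = ("age", {
    "30 or under": "sports car",
    "over 30": ("marital status", {"married": "minivan", "single": "sports car"}),
})

for rule in extract_rules(tree):
    print(rule)
# IF age is 30 or under THEN sports car
# IF age is over 30 AND marital status is married THEN minivan
# IF age is over 30 AND marital status is single THEN sports car
```

Because each leaf yields exactly one rule, the rule set is as large as the number of leaves, which is one reason smaller trees also give more compact rule sets.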